Okay, but as a native North Carolinian and a CSI enthusiast, my only true beef with this and any other cinematic representation is… NC doesn't require front plates. Just the rear. So when people filming over in Cali are all like, yo, pop this NC plate on the front bumper, I just… I just lose it. From a continuity standpoint.

The CSI zoom screw passes the vibe check for me, but the NC front plate does not lol

I mean, jeez, looking for procedural realism in modern crime shows is a lost cause.

There’s gonna be a day when people don’t understand this anymore. Considering the resolution phone cameras have now, it may not even be that much longer.

I'm wondering if AI can already solve this. I'm not even some crazy AI fanboy; I'm just thinking about the possibility of predictive AI being able to interpret compression artifacts to determine what forms would collapse into a particular pattern.
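The catch with "what forms would collapse into a particular pattern" is that lots of different forms collapse into the same one. Here's a toy sketch of that, assuming the image is shrunk by plain 2x2 averaging (real camera sensors and JPEG compression are more complicated, so treat this as an illustration only). Two totally different patches end up as identical low-res pixels:

```python
# Toy model (an assumption): shrink a grayscale image by averaging
# each non-overlapping 2x2 block into a single pixel.
def downsample(img):
    """Average non-overlapping 2x2 blocks of a grayscale image (list of rows)."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]

# Two clearly different 4x4 "high-res" patches (0 = black, 255 = white).
vertical_stripes = [
    [0, 255, 0, 255],
    [0, 255, 0, 255],
    [0, 255, 0, 255],
    [0, 255, 0, 255],
]
checkerboard = [
    [0, 255, 0, 255],
    [255, 0, 255, 0],
    [0, 255, 0, 255],
    [255, 0, 255, 0],
]

print(downsample(vertical_stripes))  # [[127.5, 127.5], [127.5, 127.5]]
print(downsample(checkerboard))      # [[127.5, 127.5], [127.5, 127.5]]
print(downsample(vertical_stripes) == downsample(checkerboard))  # True
```

Given only the 2x2 grey result, nothing in the data says whether it came from stripes, a checkerboard, or a flat grey wall, so any "prediction" has to pick one using assumptions from somewhere else.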


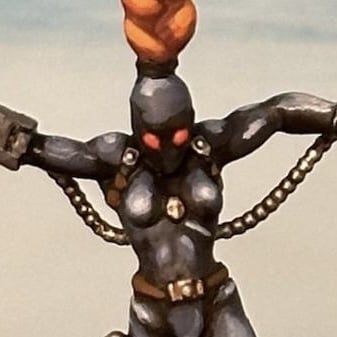

Calm down there, you’re starting to sound all inquisitive & such. Like that creamsicle lookin’ fella from the show.

No, but AI can be used to make up “evidence” and falsely convict people through “science”, “technology”, and “math” that people don’t understand but assume is correct because computers or something.

This already happens. See the Samsung Galaxy S23 Ultra moon-photo marketing that turned out to be a lie.

There have been upscaling AIs for a few years that can take a blurry picture and, e.g., guess that some pixels are probably hair, then swap those out for a custom-rendered version of hair.

Sometimes that works well, but you often still have uncanny-valley stuff going on. I also certainly don't feel like they're better than humans at actually interpreting low-res images, not in their current state.

And, well, it should also be noted that if you prime such an AI with an image of the suspect, it will absolutely find a way to make a blurry mess of pixels look like that person. So it certainly shouldn't serve as the only evidence.
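A toy sketch of the priming problem. The `enhance_with_prior` function here is hypothetical and is not a real ML model: for each blurry pixel it just picks the 2x2 block closest (least squares) to a "prior" image that still averages out to that blurry value. Real upscalers learn their priors from training data, but the basic issue is the same: feed it two different "suspect" images and you get two different sharp results, both perfectly consistent with the same blurry input.

```python
def downsample(img):
    """Average non-overlapping 2x2 blocks of a grayscale image (list of rows)."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]

def enhance_with_prior(low, prior):
    """Hypothetical toy 'enhancer': for each low-res pixel, return the 2x2 block
    closest (in squared error) to the prior whose average equals that pixel.
    The least-squares answer is the prior block shifted by a constant.
    Clipping to 0-255 is ignored for simplicity."""
    out = [row[:] for row in prior]  # start from a copy of the prior
    for i, row in enumerate(low):
        for j, target in enumerate(row):
            r, c = 2 * i, 2 * j
            coords = [(r, c), (r, c + 1), (r + 1, c), (r + 1, c + 1)]
            block_mean = sum(prior[y][x] for y, x in coords) / 4
            shift = target - block_mean  # move the block so its mean matches
            for y, x in coords:
                out[y][x] = prior[y][x] + shift
    return out

blurry = [[100.0]]                  # one blurry pixel from the "footage"
suspect_a = [[220, 20], [20, 220]]  # made-up "suspect" priors
suspect_b = [[10, 210], [210, 10]]

result_a = enhance_with_prior(blurry, suspect_a)
result_b = enhance_with_prior(blurry, suspect_b)
print(result_a)  # [[200.0, 0.0], [0.0, 200.0]]
print(result_b)  # [[0.0, 200.0], [200.0, 0.0]]
print(downsample(result_a) == blurry == downsample(result_b))  # True
```

Both "enhanced" images are fully consistent with the footage, and each one looks like whichever suspect it was primed with. That's exactly why it can't be the only evidence.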

Answer: an ML model just makes it the fuck up.